
I see three areas that this project could evolve towards:

1) Group laptop improvisations, where the samples generated in the studio are shared among all performers, either in free improvisation or with each laptop performer assigned a role (e.g. beats, melody, bass/drone, effects processing). The collective output of these performances could then easily be recorded as polished album pieces. Synchronized recording across all the laptops could easily be implemented over a wireless network (the resulting files would then have to be mixed down), or an instant master recording could be made through the PA mixer.

2) Laptops with shared samples (as above) blended with live processing of acoustic instruments via microphones. In this case, the recording space and the quality of the microphones would become important factors in the quality of the final product. A purely laptop ensemble would not have this limitation.

3) The final logical extension of this is not only processing the live audio stream, but also capturing and manipulating fragments of it in real time, during and as part of the performance. With software custom-designed for this purpose, both the individual sample fragments and the master output of each laptop could be saved at the end of the recording session. This would result in instantly polished pieces of music needing little or no post-production, as well as processed collections of samples requiring little or no editing. This third option would ideally be undertaken by ensembles that play together regularly, so that a feedback loop of samples from previous sessions could inform the development of future sessions.

I felt like testing the portability, and making music in a garden. So I made one of the pieces here at the Adelaide Botanic Gardens, sitting on a log. It actually worked really well.

I've decided to finish this phase tomorrow with one last piece, totaling 12 in 12 days.

Hardware:

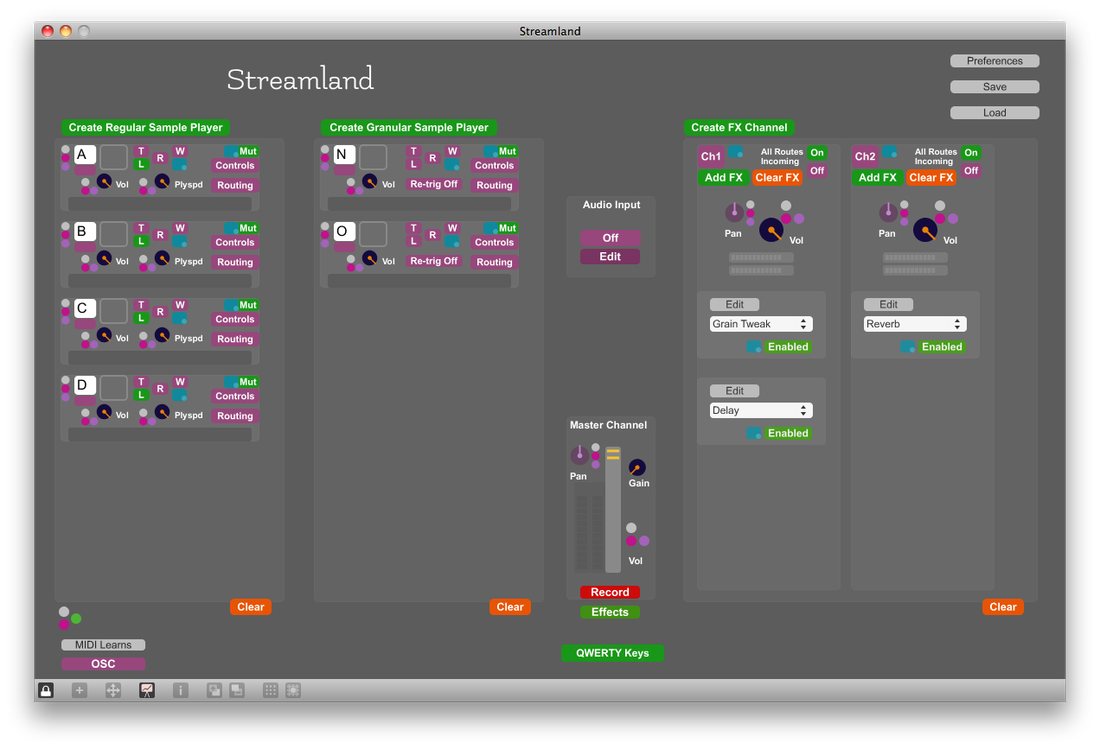

- Headphones: Sennheiser HD25SP
- Laptop: MacBook Pro, 2 GHz Intel Core i7, Mac OS X 10.6
- MIDI controllers: Icon iControl, M-Audio Oxygen 61, Korg NanoKontrol2 (pictured)

Software:

- Audio Finder
- Streamland: custom-built in Max 5, with thanks to the work of Timo Rozendal for the grainstretch~ object

As the process has evolved, I've explored the idea of a fixed improvisation setup. In the Streamland instrument, this would mean having several sample slots and effects pre-loaded and pre-mapped to MIDI. This way, at least a core set of controls could get into my muscle memory, and I could develop a more immediate and focused auditory-tactile relationship, relying less on my eyes.

What eventually evolved was not to have anything pre-mapped, but rather to develop a flexible consistency. This generally involved:

- sample on/off triggers and sample reverse buttons in the same place on the QWERTY keyboard each time
- volume faders mapped to the same or similar places (depending on which samples need a fader, and which could be grouped together on a single fader)
- core effects mappings for the basic effects setup used almost every time, sometimes augmented with additional effects: dials mapped to delay time, delay feedback and reverb room size

This would typically result in an entire control setup of approximately:

- 20 QWERTY keys
- 8 faders
- 4 dials
- the laptop trackpad

This setup is also completely portable. I did the MIDI mappings with an Icon iControl, and I've now switched to a Korg NanoKontrol. Apart from this, it's just a laptop and headphones.

Brief notes from two of the studio improvisors. They were asked to discuss their involvement in the studio process after being played some of my laptop improvisations:

Dan Thorpe

I played to Al’s work, and played to what I thought he’d want, but then went beyond that also. I played to create something that was already complete; I thought of the recording session as one whole piece. Sampling is interesting because you take something that is complete and make something new out of it. And though it's complete, you can take something from it without making it any less complete.

Meredith Lane

Listening to a sample of myself used in a piece feels removed from how I remembered playing, but I don’t feel precious about being recontextualized. The studio recording process was helpful. Even though I consider the material Al's property and not mine, it was interesting to see how I’d play in that context. I was unfamiliar with being recorded in a studio, so I thought about playing in a different way. I tried to vary the session as much as possible, but felt like I got trapped in the nuances of my own style. I’m considering using elements of the recording for my own work, but perhaps re-recording them myself.

Notes for refinement based on the improvisation process so far:

- often leaving loops playing for too long
- most of the first 6 recordings did not feature much prominent performative FX tweaking, sample micro-looping or granular suspension
- tended to be either spacey and fragmented, or totally reliant on loop sync. Would like a balance of both
- need to prepare ‘sections’ of musical material in advance, so I can easily change from one to the next and stay in flow
- often turning high-pitched samples up too loud for too long; they generally don’t need to be as loud as the rest
- volume dynamics are always either slow, or immediate cuts. Try other dynamic speeds, and techniques like swells and fluctuations
- not using any panning techniques (static or dynamic) on samples or FX
- not being bold with FX, not experimenting with new techniques and combinations
- apart from glitch sounds, everything is high fidelity. Explore low fidelity in other aspects: perhaps process samples in Audition, or get some of the dirtier mixdowns, and explore the textures of noise floors, etc.
- reveal the inner workings of the technology through the music; travel further away from sounding like acoustic recordings
- lack of rhythmic samples and combined samples in the collection. Do a few Audition sessions editing rhythms together and combining rhythms of different instruments

An interesting psychological effect is revealed for me through this way of making music.

As I'm improvising, my focus becomes much more intense. Sampling has always been a process of construction for me in the past, where I could take as long as needed and keep tweaking every little nuance. But now, once the record button is on, I just have to put myself into it, and if I make a mistake, too bad. I have already been tempted to do post-production fixes, but for this phase at least, I've decided to be purist about it while my skills develop. In the Streamland instrument, recordings are limited to the stereo master track. There is no multi-track recording, so most errors couldn't be fixed even if I wanted to. I've found this to be very liberating. It gets me deeper into the moment of creation, and it doesn't add to the psychological weight of unfinished material that so often builds up in my music production. And so there's that paradox: through proper discipline comes true spontaneity.

When I started creating my own instruments two years ago, I often came back to the idea of the sand mandala, a process of creating a beautiful and intricate artwork that is simply swept away at the end. I toyed with the idea of making instruments that had no save or record functions. You have to be in the moment with the music as you create it, and when the moment's gone, it's gone. But when it came to it, I couldn't let go... So instead, this is where that idea has brought me.

I began my laptop improvisations in an unfinished prototype Max instrument, which was an evolution of one of my older instruments, Square Bender. As I zeroed in on the improvisation phase, I decided that this would be the only instrument I'd use, so as not to dilute my performative focus.
So I named this instrument Streamland, and it has since undergone a series of transformations as the improvisations unfolded:

- sample muting that retains loop position, so samples can be muted without going out of sync or being retriggered
- phase offset of synced loops: shift the position at which synced loops intersect. This retains uniform loop lengths but can offset the start position of each sample
- loop syncing in reverse, and loop syncing for selections of sample fragments (that is, keeping the loop synced when only part of the sample is selected, not the whole thing). This was essentially just a bug that needed fixing. The grain-stretched loop syncing is not very tight, but it has an organic, glitchy charm
- added MIDI and QWERTY keyboard pitch control (for non-granular playback only). This is old-school pitch shifting without time-stretching. Although I could alter playback speed before, there was no way to land accurately on a pitch
- added portamento to pitch control: slide between sample playback speeds/pitches. A fine addition to the record-player-style repertoire of performative controls
- added synchronization for the non-grain player (non-pitch-correcting). I added this mainly for syncing non-pitched material (e.g. percussive loops or noise) without granular distortion. The granular syncing wasn't tight enough for some purposes, and the lack of pitch correction in this addition often adds richness to the sound-world
- added reversing to MIDI note control of samples, so a MIDI note can set playback speed either forwards or in reverse
- added MIDI button mapping for sample triggering and reversing. This frees up QWERTY keys for pitch control (MIDI note input)

[note: this instrument is not available to the public yet, but should be within the next 6 months]

I mentioned earlier on that I was considering a way of classifying and finding the samples in future iterations as this project grew.
I actually found that after doing only two or three improvisations, I needed something immediately. There were too many samples, and navigating through them was a case of random hit and miss.

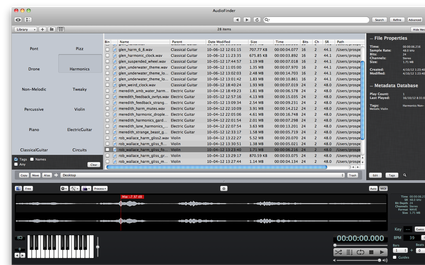

So I discovered Audio Finder, which has an amazingly efficient way of tagging samples by multiple categories. This has become an essential element of my improvisations. I can search for instrument type, technique, rhythm, etc. in Audio Finder, then drag and drop straight from there into my Max performance instrument.

A few things regarding the scope of the project that I've been considering and/or adjusting along the way. These are as much for me to keep track of for future iterations of this project as anything else.

1) I had the idea early on to create visual artworks to bring to the studio, which the performers would look into while improvising. I'd then use these same artworks when I did my laptop improvisations, to explore a kind of atmospheric perceptual consistency. I didn't explore this due to the time and expense involved, but I'd still like to in the future.

2) Whether or not to include my own samples (i.e. those that I had created or performed myself) in the sample bank has been an ongoing question. At first I saw no problem with it, but after beginning my improvisations I discovered how far outside my comfort zone I was forced by not doing so. I recorded one improvisation with a sampled beat (a Native Instruments sample, so not really in the spirit of this project). Although the piece still resonated with me, it didn't feel as liberating as the others. In those, not relying on the grounding of a sampled beat demanded much more structural ingenuity and spontaneity.

3) I've been grounding this research in the concept of "group mind" improvisation, but in an asynchronous sense: we are improvising together, but at different points in time. What this lacks is feedback from me to the performers. It's only a one-way dialogue, with me responding to them. My peers proposed some ideas, such as playing my improvisations to a second round of studio performers and getting their musical responses to that. This kind of feedback mechanism may also be developed in future iterations.
