
For some time now I've had the long-term goal of creating a recording and sample-production setup that I could travel with, so that I could go anywhere in the world and record, sample and produce music - to experience a variety of cultures, atmospheres, people, instruments, musical styles, etc.

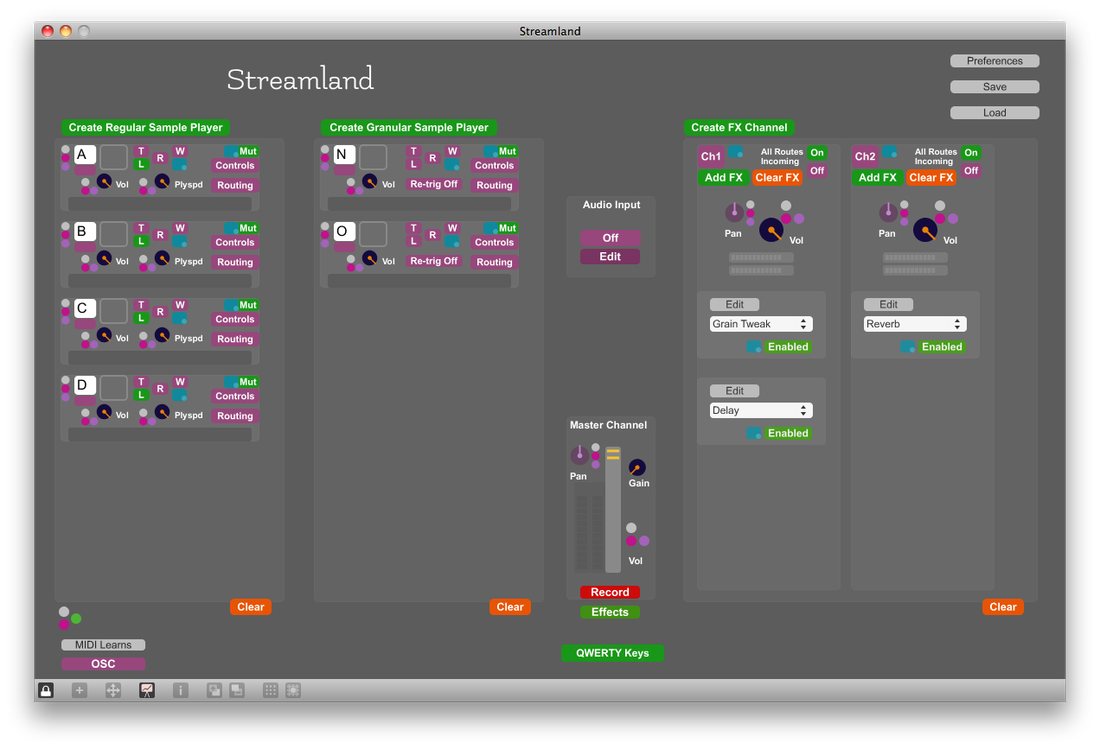

What interests me most about this idea is not just the recording interactions, but creating the music while in those places, and getting a performance/sampling feedback loop happening. Although this idea is not related to Streamland as it currently exists, Streamland has laid the groundwork for at least the traveling production setup - laptop, headphones and NanoKontrol. A few compact portable mics and a compact mic stand are all that would be needed to fulfill the sampling aspect, and using USB mics could eliminate the need for an audio interface. Ideally, this is the direction in which I'd love to guide the Streamland concept.

Hardware:

- Headphones - Sennheiser HD25SP
- Laptop - MacBook Pro - 2 GHz Intel Core i7 - Mac OS X 10.6
- MIDI Controllers - Icon iControl, M-Audio Oxygen 61, Korg NanoKontrol2 (pictured)

Software:

- Audio Finder
- Streamland - custom-built in Max 5 - with thanks to the work of Timo Rozendal for the grainstretch~ object

As the process has evolved, I've explored the idea of a fixed improvisation setup - i.e. in the Streamland instrument, having several sample slots and effects pre-loaded and pre-MIDI-mapped. This way, at least a core set of controls could get into my muscle-memory, and I could develop a more immediate and focused auditory-tactile relationship, relying less on my eyes.

What eventually evolved was not to have anything pre-mapped, but rather to develop a flexible consistency. This generally involved:

- sample on-off triggers and sample reverse buttons in the same place on the QWERTY keyboard each time
- volume faders mapped to the same or similar places (depending on which samples need a fader, and which could be grouped together on a single fader)
- core effects mappings - for the basic effects setup that has been used almost every time, and sometimes augmented with additional effects - dials mapped to delay time, delay feedback, reverb room size

This would typically result in the entire control setup consisting of approximately:

- 20 QWERTY keys
- 8 faders
- 4 dials
- and the laptop track-pad

This setup is also completely portable - MIDI mappings were done with an Icon iControl, and I've now switched to a Korg NanoKontrol. Apart from this, it needs just a laptop and headphones.

I began my laptop improvisations in an unfinished prototype Max instrument, which was an evolution of one of my older instruments - Square Bender. As I zeroed in on the improvisation phase, I decided that this would be the only instrument I'd use - so as not to dilute my performative focus. I named this instrument Streamland, and it has now undergone a series of transformations as the improvisations unfolded:

- sample muting that retains loop position - so samples can be muted without going out of sync or being retriggered
- phase offset of synced loops - shifts the position at which synced loops intersect. This retains uniform loop lengths, but can offset the start position of each sample
- loop syncing in reverse, and loop syncing for selections of sample fragments (i.e. keeping the loop synced when only a part of the sample is selected, not the whole thing) - this was essentially just a bug that needed fixing. The grain-stretched loop syncing is not very tight, but it has an organic glitchy charm
- added MIDI and QWERTY keyboard pitch control (for non-granular playback only) - this is old-school pitch shifting without time-stretching. Although I could alter playback speed before, there was no way to land accurately on a pitch
- added portamento to pitch control - slide between sample playspeeds/pitches. A fine addition to the record-player-style repertoire of performative controls
- added synchronization for the non-grain player (non-pitch correcting) - I added this mainly for syncing non-pitched material (e.g. percussive loops or noise) without granular distortion. The granular syncing wasn't tight enough for some purposes, and the non-pitch correction in this addition often adds richness to the sound-world
- added reversing to MIDI note control of samples - so a MIDI note can set playspeed in forwards or reverse
- added MIDI button mapping for sample triggering and reversing - this frees up QWERTY keys for pitch control (MIDI note input)

[note: this instrument is not available to the public yet, but should be within the next 6 months]

I mentioned earlier on that I was considering a way of classifying and finding the samples in future iterations as this project grew. I actually found that after doing only two or three improvisations I needed something immediately - there were too many samples, and navigating through them was a matter of random hit and miss.
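To make the note-based pitch control and portamento from the feature list above a little more concrete, here is a minimal Python sketch. It is not the Max patch itself: the function names, the root note of 60 (the key that plays the sample at its original speed) and the linear glide are all assumptions made for illustration. It relies only on the standard equal-temperament relationship, where each semitone multiplies playback speed by the twelfth root of two.

```python
def note_to_playspeed(note, root_note=60, reverse=False):
    """Return a playback-speed ratio for a MIDI note.

    root_note (assumed to be 60 here) plays the sample at its original speed.
    Each semitone away from it scales the speed by 2 ** (1 / 12).
    A negative result means the sample is played in reverse.
    """
    speed = 2.0 ** ((note - root_note) / 12.0)
    return -speed if reverse else speed


def portamento(start_speed, target_speed, steps):
    """Return `steps` intermediate speeds gliding linearly to target_speed.

    A linear glide is the simplest choice; a real instrument might
    interpolate in pitch rather than in speed.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    span = target_speed - start_speed
    return [start_speed + span * i / steps for i in range(1, steps + 1)]


if __name__ == "__main__":
    print(note_to_playspeed(72))                 # one octave up: 2.0
    print(note_to_playspeed(48, reverse=True))   # one octave down, reversed: -0.5
    print(portamento(1.0, 2.0, 4))               # [1.25, 1.5, 1.75, 2.0]
```

Because pitch and speed are tied together here, there is no time-stretching: an octave up also halves the sample's duration, which is the record-player behavior described in the list.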

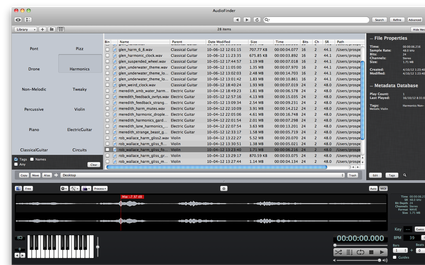

So I discovered Audio Finder, which has an amazingly efficient way of tagging samples by multiple categories. This has become an essential element of my improvisations - I can search for instrument type, technique, rhythm, etc. in Audio Finder, then drag-drop straight from there into my Max performance instrument.

I shifted from my original sample editing plan - to use my software Wave Exchange to cut and process the samples - to instead continue to use Audition. Although Wave Exchange is much better for dynamic effects processing, I quickly realized that I could save most of that for the laptop improvisation phase, as simply editing the mixdowns into usable fragments was already a huge task. To give an example - a 10 minute recording yielded about 50 separate samples - equaling hours of post-production work of the stifling non-performative variety.

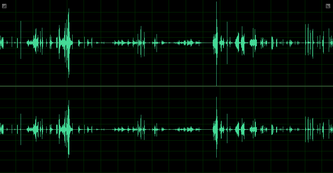

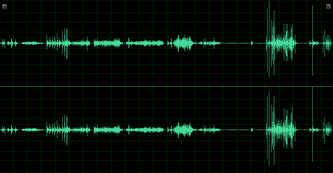

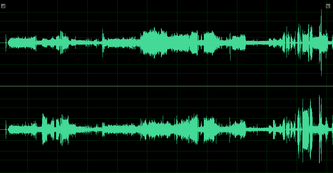

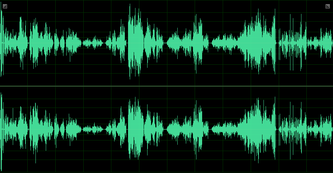

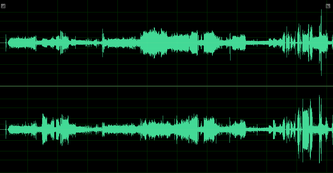

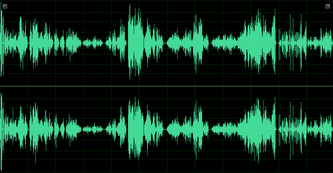

I have since realized that a system could easily be devised which would allow me to process recordings into separate samples on the fly in the studio - at the same time as the performer is playing:

1) I custom-design software that essentially provides me with as many separate audio buffers as I need (creatable at the press of a button), which can be individually recorded to - with or without effects - and the effects can be manipulated by me (or potentially by the performer) in real-time as the performer is playing
2) I sit in the control room and signal to the performer in the studio to start, stop, fade in, fade out, etc. - in order to synchronize my recording with their separate gestures
3) At the end of the session, each separate buffer can be saved individually, and each of these samples would require minimal editing
4) Thus musical gestures are immediately captured as individual samples, and the recording session becomes a collaborative improvisation

So later that day I modified one of my software instruments, “Strange Creatures”, to suit this purpose. With this, recording separate gestures to different buffers with input effects can be done with minimal setup, thus not overly interrupting the performative flow. Although this software won’t be used in this Uni phase of the project, I plan on using it for future collaborative Streamland studio sessions.

Above are the waveforms for each studio session. All recordings were done in Pro Tools HD at the Elder Conservatorium's Electronic Music Unit and mixed down in Adobe Audition.
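The buffer-per-gesture workflow in the numbered steps above could be sketched roughly as follows. This is only an illustration in Python, not the modified “Strange Creatures” instrument: the class name `GestureRecorder`, its methods and the idea of passing the input effect as a per-sample function are all hypothetical, and saving buffers to disk is left out.

```python
class GestureRecorder:
    """Hypothetical sketch: one audio buffer per musical gesture."""

    def __init__(self):
        self.buffers = []   # one list of samples per gesture
        self.active = None  # index of the buffer being recorded to, or None

    def new_buffer(self):
        """Create an empty buffer (the 'press of a button') and return its index."""
        self.buffers.append([])
        return len(self.buffers) - 1

    def start(self, index):
        """Arm a buffer so that incoming audio is written to it."""
        if not 0 <= index < len(self.buffers):
            raise IndexError("no such buffer")
        self.active = index

    def stop(self):
        """Stop recording; audio arriving between gestures is discarded."""
        self.active = None

    def process(self, block, effect=None):
        """Handle one incoming block of samples.

        If a buffer is armed, the block is appended to it, optionally
        passed through `effect` (a function applied to each sample).
        """
        if self.active is None:
            return
        if effect is not None:
            block = [effect(sample) for sample in block]
        self.buffers[self.active].extend(block)


if __name__ == "__main__":
    rec = GestureRecorder()
    first, second = rec.new_buffer(), rec.new_buffer()

    rec.process([0.1, 0.2])           # nothing armed yet, so this is ignored
    rec.start(first)
    rec.process([0.5, -0.5])          # first gesture, recorded dry
    rec.stop()

    rec.start(second)
    rec.process([1.0], effect=lambda s: s * 0.5)  # second gesture, with a gain effect
    rec.stop()

    print(rec.buffers)                # [[0.5, -0.5], [0.5]]
```

Keeping each gesture in its own buffer is what removes most of the later editing: the silence between gestures is never recorded, so each saved buffer is already close to a finished sample.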

I've included these images because they give an instant insight into the performers' responses to the idea of improvising with "breathing space" between musical gestures... Some could clearly flow out of the silence into a gesture, then roll off into silence again... Some climbed onto an idea, rode it for a while, then climbed off, stopped, and climbed onto the next one... Some transformed a continuous stream of sound-world... ...and some of the performers moved between all these ways of playing.

Audio Processing

Initially Audition was used for the mixdowns simply because I didn't have Pro Tools on my laptop, but I also found that it provided a much smoother workflow for managing the intricacies of waveform editing. There was a long process of editing out extraneous sounds in the circuit recording and mic clipping in the electric guitar recording. This was done quickly and roughly (as the second phase of sample editing will be intricate and precise), but Audition seamed the sounds together with exceptional ease. Both the circuit and piano recordings were processed with Audition's Noise Reduction. As discussed below, this resulted in audible frequency erosions in the circuit recording, but seems to have cleaned up a hum in the piano recording incredibly well, with no audible degradation. The waveforms were normalized, but no effects processing will be done until the next phase, where they are edited into sample fragments ready for performance.

11'13" of improvisation

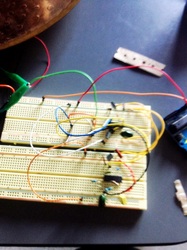

This was a very unusual recording session to manage. Iran ingeniously output the sounds produced by the circuit to a piezo speaker taped to a drum cymbal, creating amazingly bright resonances. The improvisation was a process of building and modifying the circuit during the recording. The sounds she created were imaginatively subtle and delicate. The cymbal was miked up (see below for specs), but the resulting output was very quiet. Also, the rattles and rustles of the circuit being built had to be edited out (though some of the more subtle ones were retained for character). This worked well, as I cut off the attacks of many of these sounds, leaving mysterious sweeps of noise through the mix. I also kept a few short passages of knocks and rustles when there was no circuit noise, so I could use them to create rhythmic loops.

Because the recording was so quiet, normalization brought up the room ambience, so a noise reduction process in Adobe Audition was applied to the mixdown to remove it. This resulted in audible 'erosions' of the frequency spectrum, but these are to my ear quite a beautiful addition to the vibrant and playfully chirping circuit sounds.

Due to the nature of the circuitry, breathing space between musical gestures was not really a performative option for Iran. Thus, as was the case with the violin session, a different approach to sample processing and performance will have to be taken... and my mind further opened. The absence of a tuning system in the circuit will also affect how these samples can be used alongside other instruments.

Recording Space: Elder Conservatorium -- EMU dead-room

Software: Pro Tools HD, Adobe Audition CS6

Equipment:

- circuitry -- see photos above
- Rode NT5 Stereo pair -- 4 inches high, diagonally down and in towards cymbal
- Rode NT2000 -- 4 inches high, diagonally down towards cymbal

17'16" of improvisation

This bold session began with beautiful volume and feedback swells, providing long pronounced gestures that are both sonically rich and musically simple, and eminently useful as raw sample material. These were counterpointed by a range of dense, sweet and savage moments of introspection and electric magnetic fury.

Recording Space: Elder Conservatorium -- EMU space

Software: Pro Tools HD

Equipment:

- Fender Hot Rod Deluxe Guitar Amp
- Rode NT2000 Stereo pair -- 1m high, diagonally down and in towards amp
- Rode NT5 Stereo pair -- 1.5m high, diagonally down and in towards amp
- Shure SM58 -- very close to amp, slightly offset

11'56" of improvisation

The long, winding passages of this recording will make it more difficult to edit into the kind of sonic fragments I'm used to working with, but this is the nature of the project, which will force me to come up with new ways of handling the material. The question this poses is -- in the future, do I ask a performer to be more succinct with their gestures, or do I take the opportunity to adapt and expand my own sample production & performance practices based on what they've given me? Probably a balance between these two factors is ideal, but I won't really know until I've sampled the recordings. Often some inherent synergy between the studio performance and the recording technique can make a sample an effortless joy to manipulate, regardless of its apparent lack of 'samplability'.

Recording Space: Elder Conservatorium -- EMU Dead-room

Software: Pro Tools HD

Equipment:

- Rode NT2000 Stereo pair
- Rode NT3 -- diagonally pointing down at 45deg to centre of violin body
