|

An interesting psychological effect is revealed for me through this way of making music.

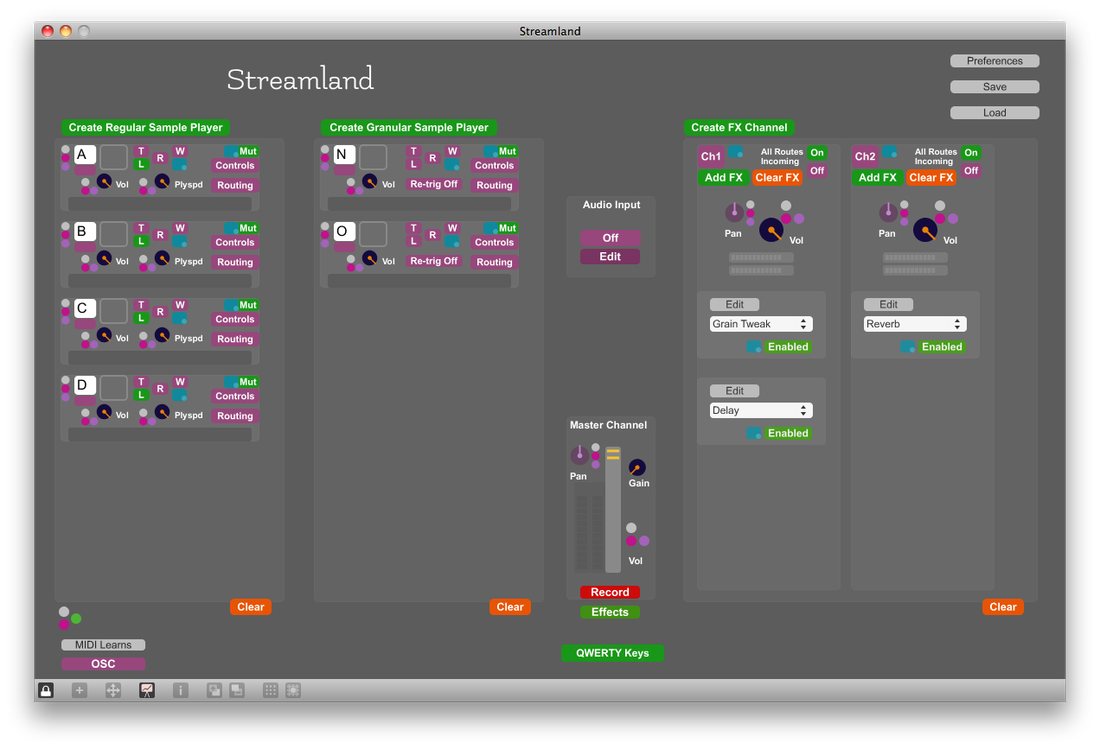

As I'm improvising, my focus becomes much more intense. Sampling has always been a process of construction for me in the past, where I could take as long as needed and keep tweaking every little nuance. But now, once the recording button is on, I just have to put myself into it, and if I make a mistake, too bad. I have already been tempted to do post-production fixes, but for this phase at least, I've decided to be purist about it while my skills develop. In the Streamland instrument, recordings are limited to the stereo master track. There is no multi-track recording, so most errors can't be fixed even if I wanted to. I've found this to be very liberating - it gets me deeper into the moment of creation, and it doesn't add to the psychological weight of unfinished material that so often builds up in my music production. And so there's that paradox that through proper discipline comes true spontaneity.

When I started creating my own instruments two years ago, I often came back to the idea of the sand mandala - a process of creating a beautiful and intricate artwork that is simply swept away at the end. I toyed with the idea of making instruments that had no save or record functions - you have to be in the moment with the music as you create it, and when the moment's gone, it's gone. But when it came to it - I couldn't let go... So instead, this is where that idea has brought me.

I began my laptop improvisations in an unfinished prototype Max instrument, which was an evolution of one of my older instruments - Square Bender. As I zeroed in on the improvisation phase, I decided that this would be the only instrument I'd use - so as not to dilute my performative focus.
So I named this instrument Streamland, and it has now undergone a series of transformations as the improvisations unfolded:

- sample muting that retains loop position - so samples can be muted without going out of sync or being retriggered
- phase offset of synced loops - shift the position at which synced loops intersect. This retains uniform loop lengths, but can offset the start position of each sample
- loop syncing in reverse, and loop syncing for selections of sample fragments (i.e. keeping the loop synced when only a part of the sample is selected, not the whole thing) - this was essentially just a bug that needed fixing. The grain-stretched loop syncing is not very tight, but it has an organic glitchy charm
- added MIDI and QWERTY keyboard pitch control (for non-granular playback only) - this is old-school pitch shifting without time-stretching. Although I could alter playback speed before, there was no way to land accurately on a pitch
- added portamento to pitch control - slide between sample playspeeds/pitches. A fine addition to the record-player-style repertoire of performative controls
- added synchronization for the non-grain player (non-pitch correcting) - I added this mainly for syncing non-pitched material (e.g. percussive loops or noise) without granular distortion. The granular syncing wasn't tight enough for some purposes, and the non-pitch correction in this addition often adds richness to the sound-world
- added reversing to MIDI note control of samples - so a MIDI note can set playspeed in forwards or reverse
- added MIDI button mapping for sample triggering and reversing - this frees up QWERTY keys for pitch control (MIDI note input)

[note: this instrument is not available to the public yet, but should be within the next 6 months]

I mentioned earlier on that I was considering a way of classifying and finding the samples in future iterations as this project grew. I actually found that after doing only two or three improvisations I needed something immediately - there were too many samples, and navigating through them was a case of random hit and miss.
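As an aside on the Streamland pitch control described above: pitch shifting without time-stretching comes down to changing the playback speed, where each semitone multiplies the speed by the twelfth root of two. The sketch below is only an illustration of that relationship in Python, not the Max patch itself. The function names, the choice of MIDI note 60 as the sample's original pitch, and the use of a negative speed to mean reverse playback are all assumptions made for the example.

```python
from typing import List


def note_to_playspeed(note: float, root_note: float = 60.0, reverse: bool = False) -> float:
    """Playback-speed multiplier that moves a sample from root_note to note.

    Assumption: the untransposed sample sits at root_note (60 here), and a
    negative speed stands for reverse playback.
    """
    rate = 2.0 ** ((note - root_note) / 12.0)  # 12 semitones = double speed
    return -rate if reverse else rate


def glide(start_note: float, end_note: float, steps: int, root_note: float = 60.0) -> List[float]:
    """Portamento sketch: playspeeds for an even slide in pitch between two notes."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return [
        note_to_playspeed(start_note + (end_note - start_note) * i / steps, root_note)
        for i in range(steps + 1)
    ]
```

An octave up doubles the playspeed and an octave down halves it, which is why the sample also gets shorter or longer as the pitch changes.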

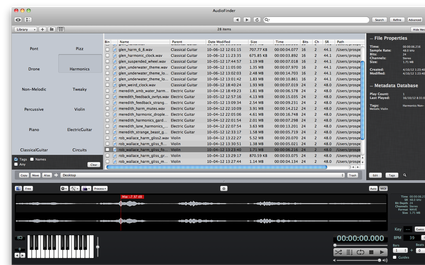

So I discovered Audio Finder, which has an amazingly efficient way of tagging samples by multiple categories. This has become an essential element of my improvisations - I can search for instrument type, technique, rhythm, etc. in Audio Finder, then drag-drop straight from there into my Max performance instrument.

Here are a few things regarding the scope of the project that I've been considering and/or adjusting along the way. These notes are as much for me to keep track of future iterations of this project as anything else.

1) I had the idea early on to create visual artworks that I'd bring to the studio that the performers would look into while improvising. I'd then use these same artworks when I did my laptop improvisations - to explore a kind of atmospheric perceptual consistency. I didn't explore this due to the time and expenses involved, but I'd still like to in the future.

2) Whether or not to include my own samples (i.e. those that I had created / performed myself) in the sample bank has been an ongoing question. At first I saw no problem with it, but after beginning my improvisations I discovered how far outside my comfort zone I was pushed by not doing so. I recorded one improvisation with a sampled beat (a Native Instruments sample, so not really in the spirit of this project), and although the piece still resonated with me, it didn't feel as liberating as the others, where non-reliance on the grounding of a sampled beat necessitated much more structural ingenuity and spontaneity.

3) I've been grounding this research in the concept of "group mind" improvisation, but in an asynchronous sense - where we are improvising together, but at different points in time. What this lacks is the feedback from me to the performers - it's only a one-way dialogue, me responding to them. Some ideas were proposed by my peers, such as playing my improvisations to a second round of studio performers and getting their musical responses to that, etc. This kind of feedback mechanism may also be developed in future iterations.

I have a clear vision for the aesthetic atmosphere I aim to create with Streamland - even the name itself is indicative of this imaginary place I have in mind.

It is influenced by a lot of music, but by a few specific albums and artists in particular:

- The Dirty Three - Whatever You Love, You Are
- Van Morrison - Astral Weeks
- DJ Shadow - Endtroducing
- The Books - Lost and Safe
- The Avalanches - Since I Left You
- Four Tet - Everything Ecstatic
- Piano works of Erik Satie

The significance of Whatever You Love, You Are, Astral Weeks, and Satie in particular is their focus on liberation from tempo constraints. In all three cases they play with concepts of loose, fluid performance that result in a much more flowing, natural aesthetic and, for me as a listener, conjure a deeply immersive atmosphere. Synaesthetically, there is also a link between all these albums (and Satie) - the colour schemes that I perceive in them are generally lush natural textures - green and leafy - with the exception of Since I Left You, which is more of a deep blue oceanic theme, as its cover would suggest. Even the highly electronic Everything Ecstatic seems to conjure this immersively arboreal atmosphere - and provides for me a conceptual bridge for how to openly transition back and forth from the constructed collages of DJ Shadow to the performative dialoguing of The Dirty Three.

As I head into the performance phase of my project, I should say that Kieran Hebden's improvisational style has always inspired me.

I had a beautiful moment once, dancing in my kitchen listening to Four Tet's Everything Ecstatic. I imagined that it was being improvised and I had a vision of some instrument that didn't exist, with which that kind of music could be performed all at once. That continues to be an evolving blueprint in my mind.

The sample editing process was finally completed after many hours and days of intricate work.

Final count - 381 individual samples (gleaned from just under an hour of raw studio recordings). And that's just on the first pass. If I went over those recordings again, I could easily double that number. And it's interesting to consider what would happen if someone else edited samples out of those same recordings, and how their interpretation would differ. Anyway, 381 samples is huge. That could keep me going for years. But in the interests of diversity, as I continue to develop this project in the future I aim to expand the array of instruments and personalities used.

My consideration now is categorizing the samples for quick and easy discovery before and during performance. For now I just use the Mac Finder - where my samples are arranged by instrument type - but it is not at all ideal. I have heard rumour of someone developing a visual system where samples can appear as nodes that float around, and can be clumped together based on meta-data or audio analysis - for example instrument type, melodic/rhythmic, sample length, loudness, timbral characteristics, etc. So you select one of these elements from a menu, and a cluster of relevant samples forms. Ideally then, I'd just drag and drop these sample-nodes into my instrument and play.

I shifted from my original sample editing plan - to use my software Wave Exchange to cut and process the samples - to instead continue to use Audition. Although Wave Exchange is much better for dynamic effects processing, I quickly realized that I could save most of that for the laptop improvisation phase, as simply editing the mixdowns into useable fragments was already a huge task. To give an example - a 10-minute recording yielded about 50 separate samples - equaling hours of post-production work of the stifling non-performative variety.
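The clustering idea mentioned above (pick a metadata element, and the relevant samples clump together) can be sketched in a few lines of Python. This is only a rough illustration of grouping by one chosen field, not the visual system I heard about, and it leaves out audio analysis entirely. The `Sample` fields, the `cluster_by` helper and the sample names are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Hashable, List


@dataclass(frozen=True)
class Sample:
    # Hypothetical metadata; a real bank might also carry loudness or timbre tags.
    name: str
    instrument: str
    character: str  # "melodic" or "rhythmic"
    length_s: float


def cluster_by(samples: List[Sample], field: str) -> Dict[Hashable, List[str]]:
    """Group sample names by the value of one metadata field."""
    clusters = defaultdict(list)
    for sample in samples:
        clusters[getattr(sample, field)].append(sample.name)
    return dict(clusters)


# Made-up entries, purely for illustration.
bank = [
    Sample("violin_sweep_01", "violin", "melodic", 6.2),
    Sample("piano_phrase_04", "piano", "melodic", 3.8),
    Sample("circuit_knocks_02", "circuit", "rhythmic", 1.5),
    Sample("piano_pulse_01", "piano", "rhythmic", 2.0),
]

by_instrument = cluster_by(bank, "instrument")
by_character = cluster_by(bank, "character")
```

Choosing "instrument" from the imagined menu would correspond to `by_instrument`, and choosing melodic/rhythmic to `by_character`.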

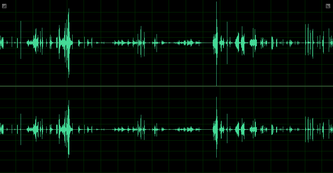

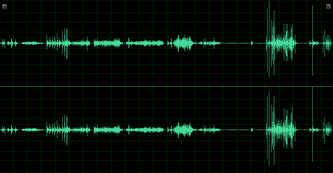

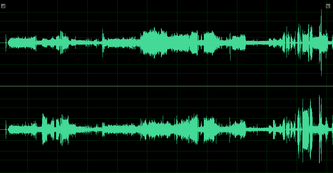

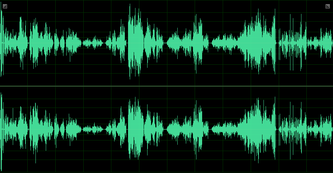

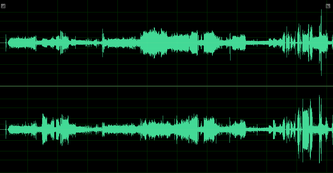

I since realized that a system could be easily devised which would allow me to process recordings into separate samples on the fly in the studio - at the same time the performer was playing:

1) I custom design software that would essentially provide me with as many separate audio buffers as I needed (creatable at the press of a button) which could be individually recorded to - with or without effects, and the effects could be manipulated by me (or potentially by the performer) in real-time as the performer was playing.

2) I sit in the control room and signal to the performer in the studio to start, stop, fade in, fade out, etc. - in order to synchronize my recording with their separate gestures.

3) At the end of the session, each separate buffer can be saved individually, and each of these samples would require minimal editing.

4) Thus musical gestures are immediately captured as individual samples, and the recording session becomes a collaborative improvisation.

So later that day I modified one of my software instruments “Strange Creatures” to suit this purpose. With this, recording separate gestures to different buffers with input effects can be done with minimal setup, thus not overly interrupting the performative flow. Although this software won’t be used in this Uni phase of the project, I plan on using it for future collaborative Streamland studio sessions.

Above are the waveforms for each studio session. All recordings were done in Pro Tools HD at the Elder Conservatorium's Electronic Music Unit and mixed down in Adobe Audition.
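For anyone curious how the buffer-per-gesture idea described above might look in code, here is a minimal Python sketch. It is not the modified Strange Creatures instrument, and it ignores real-time audio input and effects. The `BufferBank` class, its method names, the mono 16-bit WAV output and the file naming are all assumptions for the sake of illustration.

```python
import os
import struct
import wave
from typing import List, Optional


def to_pcm16(samples: List[float]) -> bytes:
    """Convert floats in [-1.0, 1.0] (clamped) to little-endian 16-bit PCM."""
    ints = [int(round(max(-1.0, min(1.0, s)) * 32767)) for s in samples]
    return struct.pack("<%dh" % len(ints), *ints)


class BufferBank:
    """One buffer per musical gesture, created on demand (hypothetical design)."""

    def __init__(self) -> None:
        self.buffers: List[List[float]] = []
        self.active: Optional[int] = None  # index of the buffer being recorded to

    def new_buffer(self) -> int:
        """The 'press of a button': create a fresh buffer and arm it."""
        self.buffers.append([])
        self.active = len(self.buffers) - 1
        return self.active

    def record(self, block: List[float]) -> None:
        """Append a block of incoming audio to the armed buffer."""
        if self.active is None:
            raise RuntimeError("no buffer armed; call new_buffer() first")
        self.buffers[self.active].extend(block)

    def stop(self) -> None:
        """Disarm recording, e.g. when the performer falls silent."""
        self.active = None

    def save_all(self, directory: str, sample_rate: int = 44100) -> List[str]:
        """Write each non-empty buffer to its own mono WAV file."""
        paths = []
        for index, samples in enumerate(self.buffers):
            if not samples:
                continue
            path = os.path.join(directory, "gesture_%03d.wav" % (index + 1))
            with wave.open(path, "wb") as wav:
                wav.setnchannels(1)
                wav.setsampwidth(2)
                wav.setframerate(sample_rate)
                wav.writeframes(to_pcm16(samples))
            paths.append(path)
        return paths
```

In a session, each start signal to the performer would pair with `new_buffer()`, each stop signal with `stop()`, and `save_all()` would run once at the end.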

I've included these images because they give an instant insight into the performers' responses to the idea of improvising with "breathing space" between musical gestures... Some could clearly flow out of the silence into a gesture, then roll off into silence again... Some climbed onto an idea, rode it for a while, then climbed off, stopped, and climbed onto the next one... Some transformed a continuous stream of sound-world... ...and some of the performers moved between all these ways of playing.

Audio Processing

Initially Audition was used for the mixdowns simply because I didn't have Pro Tools on my laptop, but I also found that it provided a much smoother workflow for managing the intricacies of waveform editing. There was a long process of editing out extraneous sounds in the circuit recording and mic clipping in the electric guitar recording. This was done quickly and roughly (as the second phase of sample editing will be intricate and precise), but Audition seamed the sounds together with exceptional ease. Both the circuit and piano recordings were processed with Audition's Noise Reduction. As discussed below, this resulted in audible frequency erosions in the circuit recording, but seems to have cleaned up a hum in the piano recording incredibly well, with no audible degradation. The waveforms were normalized, but no effects processing will be done until the next phase, where they are edited into sample fragments ready for performance.

11'13" of improvisation

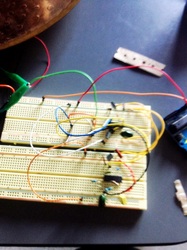

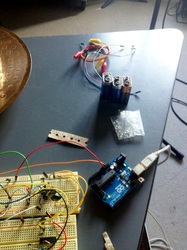

This was a very unusual recording session to manage. Iran ingeniously output the sounds produced by the circuit to a piezo speaker taped to a drum cymbal, creating amazingly bright resonances. The improvisation was a process of building and modifying the circuit during the recording. The sounds she created were imaginatively subtle and delicate. The cymbal was miked up (see below for specs), but the resulting output was very quiet. Also, the rattles and rustles of the circuit being built had to be edited out (though some of the more subtle ones were retained for character). This worked well, as I cut off the attacks of many of these sounds, leaving mysterious sweeps of noise through the mix. I also kept a few short passages of knocks and rustles when there was no circuit noise, so I could use them to create rhythmic loops.

Because the recording was so quiet, normalization brought up the room ambience, so a noise reduction process in Adobe Audition was applied to the mixdown to remove it. This resulted in audible 'erosions' of the frequency spectrum, but these are to my ear quite a beautiful addition to the vibrant and playfully chirping circuit sounds.

Due to the nature of the circuitry, breathing space between musical gestures was not really a performative option for Iran. Thus, as was the case with the violin session, a different approach to sample processing and performance will have to be taken...and my mind further opened. The absence of a tuning system in the circuit will also affect how these samples can be used alongside other instruments.

Recording Space:

- Elder Conservatorium - EMU dead-room

Software:

- Pro Tools HD
- Adobe Audition CS6

Equipment:

- circuitry - see photos above
- Rode NT5 stereo pair - 4 inches high, diagonally down and in towards cymbal
- Rode NT2000 - 4 inches high, diagonally down towards cymbal |
